Design Template by Anonymous

Types and Methods of Usability Testing

Usability testing isn’t a one-size-fits-all process. It adapts to the needs of each team and project. The goal is always the same: to learn how real users interact with a design. But the way that goal is achieved can vary widely depending on budget, timeline, resources, and the stage of development. Knowing the main types of usability testing helps teams decide which method will deliver the clearest insights at the right time.

Remote vs. In-Person Testing

Remote usability testing allows participants to take part from their own environment. Sessions are typically conducted using video conferencing tools or usability platforms that capture screen recordings, mouse movements, and user commentary. This approach is cost-effective, fast to deploy, and well suited to reaching a diverse audience. Because participants can be located anywhere, teams can gather feedback from different geographic regions and time zones.

Comparison of in-person and remote usability testing approaches.

In-person testing is more traditional. The facilitator and participant share the same space. This allows the observer to pick up on subtle behaviors like posture, facial expressions, or moments of hesitation that may not be visible in a screen recording. In-person testing also supports hands-on prototypes and physical products, making it essential for testing beyond the screen. While it often takes more time and resources, it provides a rich stream of observational data that supports qualitative insights.

Moderated vs. Unmoderated Testing

Moderated testing includes a facilitator who actively engages with the participant during the test. The facilitator may ask follow-up questions, provide clarification, or adapt tasks based on the participant’s behavior. This format works well when the test involves complex flows or new features that need explanation. Moderated sessions also allow for deeper qualitative discovery through conversation and reflection.

Unmoderated testing is self-directed. Participants are given instructions and tasks, then left to complete them on their own. These sessions are usually conducted through online platforms that collect interaction data and responses. Unmoderated tests are easier to scale and schedule because no live observation is required. They’re useful for evaluating basic usability and gathering broad performance metrics like task success rates and navigation patterns.
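Unmoderated platforms collect interaction data that teams usually analyze afterward. As a minimal sketch, assuming each participant's session has been exported as an ordered list of page names (a hypothetical format; real platforms export differently), the Python below finds the most common navigation paths and counts backtracks. Here a "backtrack" is defined, as an assumption, as any step that returns to a page already visited in the same session; `count_backtracks` and `summarize_paths` are illustrative helpers, not part of any tool.

```python
from collections import Counter


def count_backtracks(path):
    """Count steps that return to a page already visited earlier in the same path.

    Assumed definition of "backtrack" for this sketch only.
    """
    seen = set()
    backtracks = 0
    for page in path:
        if page in seen:
            backtracks += 1
        seen.add(page)
    return backtracks


def summarize_paths(sessions):
    """Summarize navigation across unmoderated sessions.

    sessions: dict mapping a participant ID to an ordered list of page names
    (hypothetical export format).
    """
    path_counts = Counter(tuple(path) for path in sessions.values())
    backtracks = {pid: count_backtracks(path) for pid, path in sessions.items()}
    return path_counts.most_common(3), backtracks


# Hypothetical page-visit logs, for illustration only.
sessions = {
    "p1": ["home", "products", "cart", "checkout"],
    "p2": ["home", "products", "home", "search", "products", "cart", "checkout"],
    "p3": ["home", "products", "cart", "checkout"],
}
top_paths, backtracks = summarize_paths(sessions)
print(top_paths[0])  # (('home', 'products', 'cart', 'checkout'), 2)
print(backtracks)    # {'p1': 0, 'p2': 2, 'p3': 0}
```

Participants with many backtracks are good candidates for a closer look at their recordings or written responses.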

Example of moderated and unmoderated testing formats.

Qualitative vs. Quantitative Testing

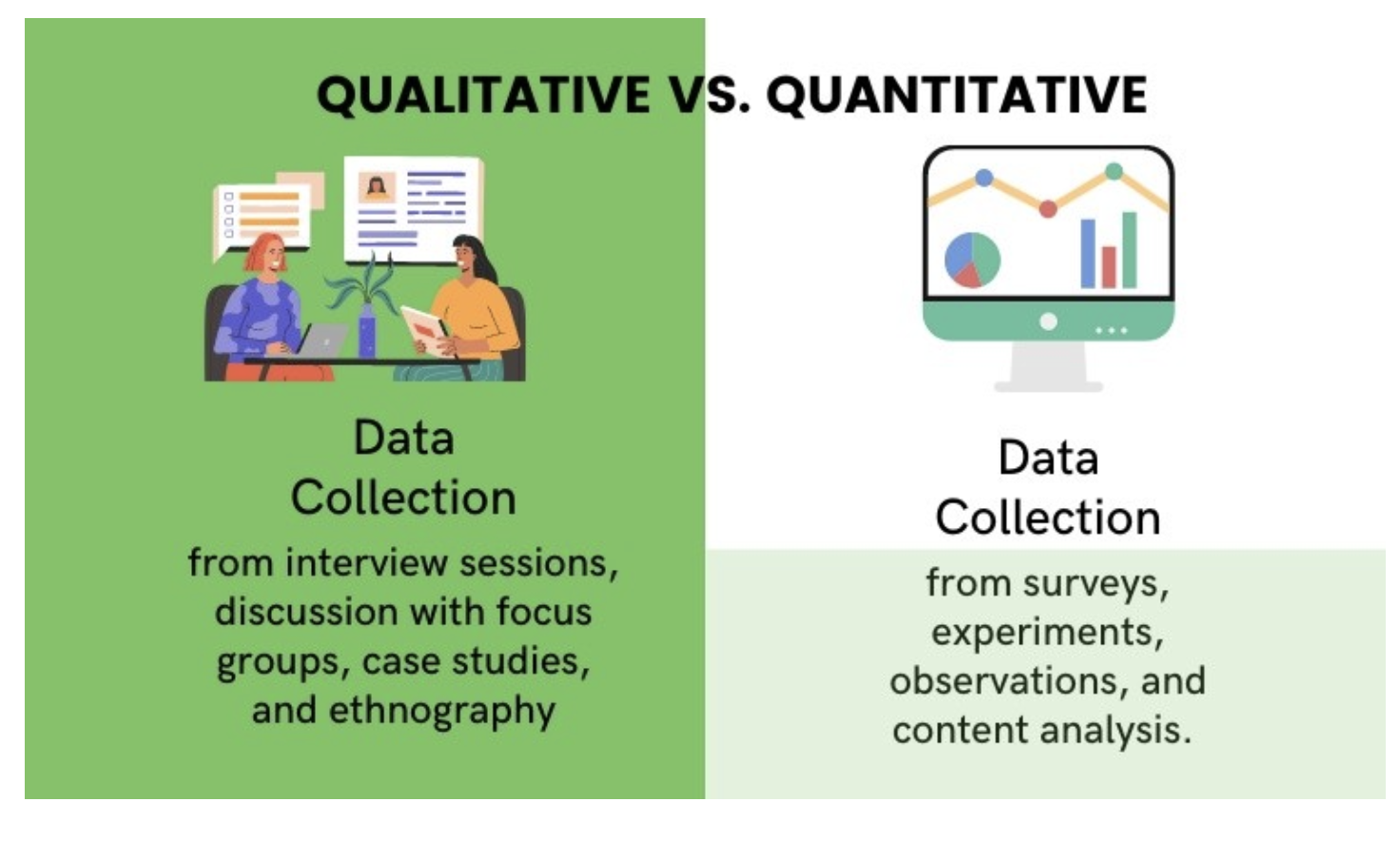

Qualitative usability testing captures the thoughts, emotions, and behaviors of users. It focuses on why users act the way they do. Participants are often asked to think aloud while performing tasks, giving facilitators a window into their expectations and points of confusion. Observers note what users say, where they hesitate, and which interactions seem effortless or difficult.

Quantitative testing deals with numbers. It measures how long tasks take, how often users succeed, how many errors occur, and how frequently users backtrack. These metrics are especially helpful when teams need benchmarks or want to track progress across iterations. For example, if a previous version of a checkout form had a 40% abandonment rate and the new version has 10%, that’s a drop of 30 percentage points (a 75% relative reduction). This is a clear sign of improvement, provided both versions were tested on comparable tasks with enough participants for the difference to be reliable.
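The checkout example can be worked through in a few lines. This is a minimal sketch: the counts (20 of 50 participants abandoning the old form, 5 of 50 abandoning the new one) are hypothetical, chosen only to reproduce the 40% and 10% rates. The helper names are illustrative. The significance check uses a standard pooled two-proportion z-test, one common way to ask whether a difference this large is likely to be more than chance.

```python
import math


def abandonment_rate(abandoned, started):
    """Share of participants who started the task but did not finish it."""
    return abandoned / started


def two_proportion_z_test(x1, n1, x2, n2):
    """Two-sided z-test for a difference between two proportions (pooled variance)."""
    p1, p2 = x1 / n1, x2 / n2
    pooled = (x1 + x2) / (n1 + n2)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n1 + 1 / n2))
    z = (p1 - p2) / se
    # Standard normal CDF via erf: Phi(z) = (1 + erf(z / sqrt(2))) / 2.
    p_value = 2 * (1 - 0.5 * (1 + math.erf(abs(z) / math.sqrt(2))))
    return z, p_value


# Hypothetical counts matching the 40% -> 10% example.
old_rate = abandonment_rate(20, 50)
new_rate = abandonment_rate(5, 50)
print(f"Absolute drop: {(old_rate - new_rate) * 100:.0f} percentage points")  # 30
print(f"Relative reduction: {(old_rate - new_rate) / old_rate:.0%}")          # 75%

z, p = two_proportion_z_test(20, 50, 5, 50)
print(f"z = {z:.2f}, p = {p:.4f}")  # z is about 3.46; p is well below 0.01
```

The normal approximation behind this test is only reasonable when each version has a fair number of both abandoned and completed sessions. With very small samples, an exact test such as Fisher's is a common alternative.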

Both approaches have value. Qualitative feedback tells the story, while quantitative data provides measurable evidence. Many usability tests use a hybrid method, collecting observations while also tracking success rates and time on task, to produce a well-rounded evaluation.
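A hybrid session log can be as simple as one record per task attempt that holds both the numbers and the observer's notes. The sketch below assumes a hypothetical `TaskAttempt` record and `summarize_task` helper. The field names, and the choice to average time on task over successful attempts only, are illustrative conventions rather than a standard.

```python
from dataclasses import dataclass, field
from statistics import mean


@dataclass
class TaskAttempt:
    """One participant's attempt at one task, combining metrics and observations."""
    participant: str
    task: str
    success: bool
    seconds: float
    notes: list[str] = field(default_factory=list)  # think-aloud quotes, hesitations


def summarize_task(attempts, task):
    """Combine quantitative and qualitative data for a single task."""
    rows = [a for a in attempts if a.task == task]
    if not rows:
        raise ValueError(f"no attempts recorded for task {task!r}")
    successes = [a for a in rows if a.success]
    return {
        "success_rate": len(successes) / len(rows),
        # Time on task averaged over successful attempts only (one common convention).
        "mean_seconds_successful": mean(a.seconds for a in successes) if successes else None,
        # Notes from failed attempts often explain why the numbers look the way they do.
        "failure_notes": [n for a in rows if not a.success for n in a.notes],
    }


# Hypothetical observations, for illustration only.
attempts = [
    TaskAttempt("p1", "find shipping cost", True, 42.0, ["Scrolled past the shipping link"]),
    TaskAttempt("p2", "find shipping cost", False, 120.0,
                ["Expected costs on the product page", "Gave up after searching help"]),
    TaskAttempt("p3", "find shipping cost", True, 58.0),
]
summary = summarize_task(attempts, "find shipping cost")
print(summary["success_rate"])             # 0.666...
print(summary["mean_seconds_successful"])  # 50.0
```

Keeping the notes next to the metrics means a low success rate arrives already paired with the observations that explain it.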

Comparing qualitative insights with quantitative performance metrics in usability testing.

Explorative, Assessment, and Comparative Testing

Explorative testing is used early in the design cycle. It helps define the problem space and uncover user needs before any interface is finalized. Sketches, paper prototypes, or wireframes are often used in these sessions to explore possibilities. This method supports creative thinking and ensures that the product vision is rooted in real behavior rather than assumptions.

Assessment testing evaluates a product or feature that’s already been built. It verifies whether users can complete key tasks successfully. This type of testing is often used after updates or during final review. Structured tasks are created to match real-world goals, and both qualitative and quantitative data are collected. Assessment testing ensures that the product is functional, understandable, and aligned with user expectations.

Comparative testing puts multiple designs side by side to see which performs better. Participants may be shown two versions of a page layout, button label, or navigation flow and asked to interact with both. Feedback is gathered on ease of use, satisfaction, and effectiveness. This testing is ideal for decision-making when teams are torn between options or when validating a redesign against the current version.
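When each participant tries both versions, the one they see first can shape how they handle the second, for example because they have already learned the task. A common safeguard is counterbalancing, which means alternating which version comes first across participants. The sketch below assumes two hypothetical helpers, `assign_orders` and `success_by_version`, and uses simple `(participant, version, succeeded)` tuples as the result format.

```python
import random


def assign_orders(participants, versions=("A", "B"), seed=None):
    """Counterbalance presentation order for two design versions.

    Participants are shuffled, then alternately assigned A-first or B-first,
    so each order is used by half of them (one extra A-first if the count is odd).
    """
    rng = random.Random(seed)
    shuffled = list(participants)
    rng.shuffle(shuffled)
    first, second = versions
    return {
        pid: (first, second) if i % 2 == 0 else (second, first)
        for i, pid in enumerate(shuffled)
    }


def success_by_version(results):
    """Task success rate per version from (participant, version, succeeded) tuples."""
    totals = {}
    for _, version, succeeded in results:
        done, tried = totals.get(version, (0, 0))
        totals[version] = (done + int(succeeded), tried + 1)
    return {v: done / tried for v, (done, tried) in totals.items()}


orders = assign_orders(["p1", "p2", "p3", "p4"], seed=7)
print(orders)  # two participants see A first, two see B first

# Hypothetical results, for illustration only.
results = [("p1", "A", True), ("p1", "B", False), ("p2", "A", True), ("p2", "B", True)]
print(success_by_version(results))  # {'A': 1.0, 'B': 0.5}
```

With only a handful of participants, a gap in success rates is a signal to investigate alongside the qualitative feedback rather than a verdict on its own.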

Key usability testing types for different product stages.

By understanding these different types of usability testing, teams gain the ability to adapt their approach to the moment. Whether planning a product launch, evaluating a prototype, or refining an experience post-release, there’s always a method available that fits the need. The key is to choose the method that aligns best with the question being asked, then learn from what users show you.