
Safiya Noble: "Algorithms of Oppression"

Who Is Safiya Noble?

Safiya Noble is a black professor of race and gender studies at UCLA. In 2011, she had a first-hand experience with racism in the Google search algorithm.

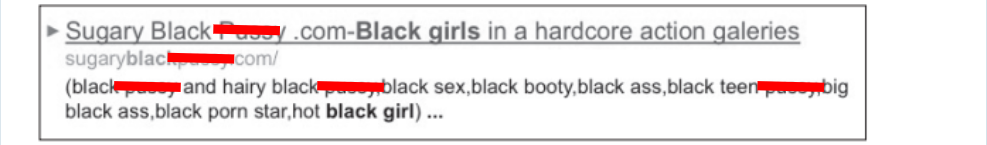

She searched for "black girls" to find something to show her young niece. She had expected the search to return accomplishments, pop culture, or celebrities. Instead, the entire first page consisted of pornographic sites. The Google search algorithm had treated "black girls" as a porn category rather than as human beings.

After this experience, Noble wanted to look further into how the Google search algorithm worked.

She found that a Google search for "white girls" did not return the same kind of results. Instead, the first page had various links about current accomplishments in the workforce and famous celebrities. Why was this the case? It is not because there is less porn featuring white women; it is because Google treated white women as more than just a porn category.

This racist incident was the catalyst for Noble's exploration of the Google search algorithm. She wanted to see why this algorithm, which is just code and therefore supposedly free of bias, could produce such racist results. There were other issues to be found, like Google showing only men in the results for the search "scientist", but Noble wanted to focus her research on how the algorithm specifically misrepresented black women.

Why This Is Harmful

The Google search algorithm misrepresents black women and upholds harmful stereotypes. The cover art of Noble's book shows a search autofill from 2011, which completes the search "Why are black women so" with these stereotypes.

With so much information readily available, people don't want to take extra time to find what they need. So when they see these autofills, like in Figure 2, or these top links, like in Figure 1, their existing stereotypes are reinforced. The average user might see these and think that since Google is a massive "search company" and this is the information its algorithm chooses to display, it must be true.

But we cannot look at this issue and think only about how it negatively affects the average Google user. We must also acknowledge how it affects the people it harms: black women. Having these stereotypes displayed in such a public setting as Google is demeaning and dehumanizing. It's completely unacceptable for Google to be promoting this misinformation. Google is practically declaring that it is all right for individuals to search for these hateful things, which puts down black women even more.

Extreme Example: Dylann Roof

Before his attack in 2015, Dylann Roof searched "Black on white crime", and the first link shown was from a white supremacist site. It was filled with racist misinformation. Instead of the first link being a site about the misconceptions surrounding black on white crime, or how it's much less common than people think, it was a site that reinforced harmful beliefs. As a result, Roof turned into a white supremacist and went on to open fire in a predominantly black church, killing nine worshippers.

This is an extreme example, but it's important to show that there are real-world consequences when the Google search page reinforces stereotypes. Roof declared that before this search, he was a normal citizen free of extremist ideas. But having these types of hateful sites so readily available changed his mindset.